Understanding the Difference Between Generative and Discriminative AI—and Why It Matters in ABA

You don’t need to understand algorithms or advanced math to get value from this blog. What you do need is a working understanding of what these systems do, how they behave in practice, and where they can help, or harm, your work.

As artificial intelligence (AI) continues to reshape clinical, educational, and organizational settings, professionals across the behavioral sciences, particularly Board Certified Behavior Analysts (BCBAs), face increasing pressure to evaluate and implement these technologies ethically and effectively. But not all AI is built the same. Some systems generate new content; others categorize and predict outcomes based on existing data. These differences matter deeply for clinical utility, ethical decision-making, and the design of workflows in applied behavior analysis (ABA).

To engage with artificial intelligence responsibly, behavior analysts must first understand the difference between generative and discriminative models. This distinction isn’t just a technicality; it’s foundational to ethical and effective practice. Whether you’re supervising RBTs, navigating documentation workflows, or making organizational decisions, your ability to evaluate AI tools depends on this core literacy.

In this post, we’ll unpack what generative and discriminative models are; how they differ in function, risk, and review method; and what this means as we integrate behavior analysis and artificial intelligence.

Two Types of AI You’re Already Seeing in ABA Tools

Let’s consider two practical examples in a clinical ABA setting:

- Generative AI Example: You record a mock session and upload the transcript to a “session note generator.” The system produces a polished SOAP note, complete with treatment recommendations, in your writing style.

- Discriminative AI Example: You upload the same transcript plus some historical data to a “goal achievement classifier” that predicts whether the client mastered the skill during that session, or if more practice is needed in future sessions. It doesn’t rewrite or create text—it decides between categories based on patterns in the data.

Both are “AI,” but they operate under different principles.

What Is Generative AI?

Generative AI refers to systems that create new data based on learned patterns. These models are often used to generate text (like ChatGPT, Gemini, Claude), images, music, and more.

How it works: Generative AI learns the joint probability of inputs and outputs: P(x, y) [read as, “The probability that x and y both occur”]. That means the model learns how certain inputs and outputs tend to occur together in the training data, even if the reasons for that co-occurrence, and the conditions under which it happens, can’t be described explicitly.

- Behavior Analytic Analogy: Like a skilled RBT writing a fictional “sample session note” from scratch after years of reading thousands of real notes. They’ve never seen this exact session, but they can produce something plausible based on patterns they’ve learned over time, even if they can’t vocally describe what those patterns are.

Common ABA examples:

- Drafting SOAP notes

- Creating social stories from prompts

- Writing session summaries or treatment reports

Risks:

- Can “hallucinate” details not present in the real data.

- May embed subtly biased language (e.g., “oppositional,” “noncompliant”) if such terms were common in the training set, because those words tend to co-occur with the language in your prompt.
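For readers who want to see the joint-probability idea from “How it works” in action, here is a minimal Python sketch. It assumes a tiny, made-up set of (antecedent, response) pairs; the data and function names are hypothetical. It only illustrates counting co-occurrences and sampling plausible new combinations, not how production systems such as large language models are actually built.

```python
import random
from collections import Counter

# Hypothetical toy training data: (antecedent, response) pairs from past session notes.
training_pairs = [
    ("demand", "escape"),
    ("demand", "escape"),
    ("demand", "compliance"),
    ("transition", "escape"),
    ("transition", "compliance"),
    ("free play", "compliance"),
]

def estimate_joint(pairs):
    """Estimate P(x, y) as the share of training pairs equal to (x, y)."""
    counts = Counter(pairs)
    total = len(pairs)
    return {pair: n / total for pair, n in counts.items()}

def generate(joint, k, seed=0):
    """Sample k new (x, y) pairs in proportion to the learned joint probabilities."""
    rng = random.Random(seed)
    pairs = list(joint)
    weights = [joint[p] for p in pairs]
    return rng.choices(pairs, weights=weights, k=k)

joint = estimate_joint(training_pairs)
print(joint[("demand", "escape")])  # 2 of the 6 pairs, so about 0.33
print(generate(joint, k=3))
```

Every sampled pair looks plausible because it mirrors the training data, yet none of it comes from the session you are documenting. That is why generative output has to be checked against the real record.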

What Is Discriminative AI?

Discriminative AI refers to systems that predict or classify outcomes based on input data. These models don’t generate new content—they decide between existing categories.

How it works: Discriminative AI learns the conditional probability of labels given input data: P(y|x) [read as, “The probability of y given that x has occurred”]. This means it tries to learn the cleanest boundary between the categories in the dataset.

- Behavior Analytic Analogy: Like a BCBA reviewing a skill acquisition graph and deciding whether the learner has met mastery criteria. No new content, just a judgment.

Common ABA examples:

- A computer vision system that classifies an emitted response as “independent,” “prompted,” or “incorrect.”

- A supervised machine learning system that predicts whether a client will master a target in the next two weeks.

- A natural language processing and supervised machine learning system that flags insurance notes that fail to meet compliance criteria.

Risks:

- Performance can drop sharply when the data the system encounters in practice differ from the data it was trained on.

- Can produce overconfident but wrong classifications without explanation.
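The discriminative approach can be sketched by estimating P(y|x) directly and using it to pick a label. This minimal Python sketch assumes hypothetical (antecedent, response) training pairs and a simple “most probable label” rule; real classifiers learn decision boundaries from many input features rather than from a lookup table like this one.

```python
from collections import Counter, defaultdict

# Hypothetical toy training data: (input x, label y) pairs.
training_pairs = [
    ("demand", "escape"),
    ("demand", "escape"),
    ("demand", "compliance"),
    ("transition", "escape"),
    ("transition", "compliance"),
    ("free play", "compliance"),
]

def estimate_conditional(pairs):
    """Estimate P(y | x) = count(x, y) / count(x) for every input x seen in training."""
    by_input = defaultdict(Counter)
    for x, y in pairs:
        by_input[x][y] += 1
    return {
        x: {y: n / sum(labels.values()) for y, n in labels.items()}
        for x, labels in by_input.items()
    }

def classify(conditional, x):
    """Return the most probable label and its probability, or None for an unseen input."""
    if x not in conditional:
        # The model has no basis for inputs unlike anything in its training data.
        return None
    label = max(conditional[x], key=conditional[x].get)
    return label, conditional[x][label]

conditional = estimate_conditional(training_pairs)
print(classify(conditional, "demand"))     # ('escape', 0.666...)
print(classify(conditional, "free play"))  # ('compliance', 1.0)
print(classify(conditional, "bath time"))  # None: never seen in training
```

Note that “free play” receives a probability of 1.0 from a single training example, a confident-looking score resting on very little evidence, and “bath time” cannot be classified at all because nothing like it appeared in training. Both are small-scale versions of the risks listed above.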

This blog is a sample of our weekly newsletter, designed for ABA professionals who are building AI literacy skills. Subscribe here.

Why the Difference Between Generative and Discriminative AI Matters for BCBAs

A common source of confusion and risk arises when practitioners use generative AI tools to make decisions that require discriminative precision. Put simply, generative AI systems are not optimized for discriminative tasks. Asking a generative model to classify a behavior, determine goal mastery, or score performance can produce outputs that look confident but are structurally unfit for those tasks.

In clinical work, whether a tool is generative or discriminative changes four things:

- Validation Process

Generative AI outputs need content review for accuracy and completeness, like proofreading a session note.

Discriminative AI outputs need input alignment and accuracy evaluation: checking that the system was trained on data similar to your own, and checking its prediction precision against your historical data.

- Accountability

With generative outputs, errors are often in what’s added or omitted (hallucinations, wrong details).

With discriminative outputs, errors are in what’s labeled (misclassification, bias).

- Integration into Workflow

Generative AI is best for drafting, brainstorming, or reducing repetitive writing. Editing a draft is usually easier than producing one from scratch.

Discriminative AI is best for triage, decision support, or flagging anomalies. It lets the computer handle the complex math.

- Ethical Implications

Generative tools may make up content that is contraindicated, irrelevant, or incompatible with behavior analytic principles. Outputs need to be edited and validated.

Discriminative tools acting as classifiers need to be validated as reliable assessment instruments, and the conditions under which they perform well (or poorly) need to be determined.
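Content review for generative output can be partly supported with simple checks. The minimal Python sketch below assumes a hypothetical AI-drafted note and a hypothetical dictionary of recorded session values, and flags any number in the draft that does not appear in the record. It catches only one narrow kind of hallucinated detail and is no substitute for reading the whole note.

```python
import re

def unsupported_numbers(draft_note, session_record):
    """Return numbers stated in the draft note that appear nowhere in the session record."""
    recorded = {str(value) for value in session_record.values()}
    # Match whole numbers and decimals such as 8, 80, or 2.5.
    stated = re.findall(r"\d+(?:\.\d+)?", draft_note)
    return [n for n in stated if n not in recorded]

# Hypothetical recorded data and AI-drafted note.
session_record = {"trials": 10, "correct": 8, "percent_correct": 80}
draft = "Client completed 10 trials with 8 correct (80%) and 3 instances of elopement."

print(unsupported_numbers(draft, session_record))  # ['3']
```

The flagged “3 instances of elopement” has no counterpart in the recorded data, which is exactly the kind of added detail described under Accountability. Omitted details, such as a recorded behavior the draft never mentions, need the reverse check.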

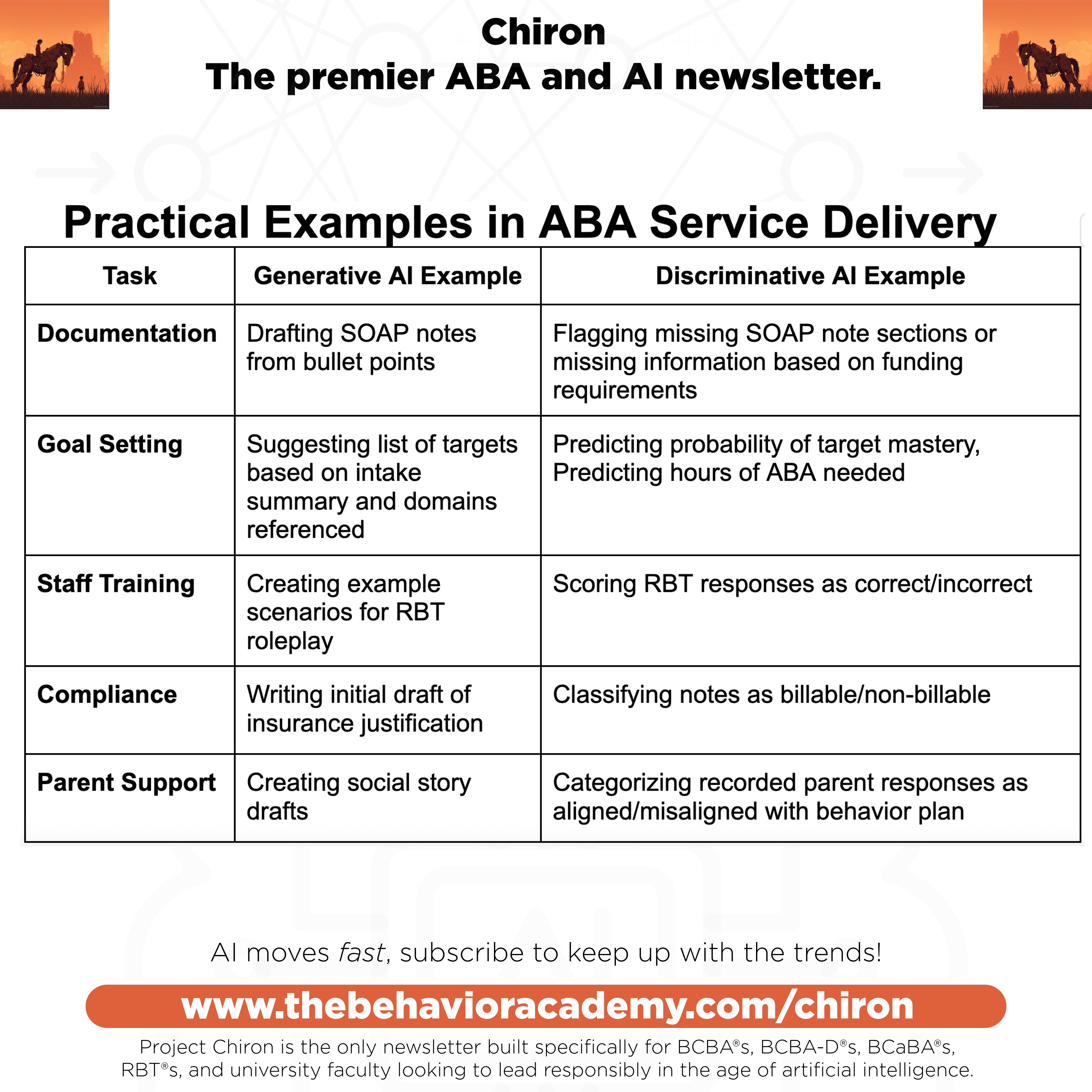

Behavior Analysis and Artificial Intelligence: Task-Specific Examples

Here’s how these two forms of AI play out across common behavior analytic tasks:

How to Choose the Right Tool for Your ABA Practice

If you’re evaluating or purchasing AI tools for clinical use, follow these three steps:

Step 1: Identify the function.

- Is the system creating new content? → Likely generative.

- Is it assigning a category or prediction? → Likely discriminative.

Step 2: Match the review method to the type.

- Generative → proofread for fidelity, ethics, and accuracy.

- Discriminative → check accuracy metrics, confusion matrices, and edge case performance.
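The accuracy metrics and confusion matrix named in Step 2 can be computed from a simple validation set: the tool’s labels alongside labels a trained observer assigned to the same responses. This minimal Python sketch uses only the standard library and hypothetical labels for the “independent / prompted / incorrect” example from earlier; the function names are illustrative, and libraries such as scikit-learn offer equivalent functions.

```python
from collections import Counter

LABELS = ["independent", "prompted", "incorrect"]

def confusion_matrix(actual, predicted, labels):
    """Rows are the observer's (actual) labels; columns are the tool's (predicted) labels."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]

def per_label_precision_recall(actual, predicted, labels):
    """Precision: share of the tool's X labels that were truly X. Recall: share of true X it caught."""
    results = {}
    for label in labels:
        tp = sum(a == label and p == label for a, p in zip(actual, predicted))
        predicted_n = sum(p == label for p in predicted)
        actual_n = sum(a == label for a in actual)
        precision = tp / predicted_n if predicted_n else 0.0
        recall = tp / actual_n if actual_n else 0.0
        results[label] = (precision, recall)
    return results

# Hypothetical validation set: observer labels vs. the tool's labels for 8 responses.
observer = ["independent", "independent", "prompted", "prompted",
            "incorrect", "independent", "prompted", "incorrect"]
tool = ["independent", "prompted", "prompted", "independent",
        "incorrect", "independent", "prompted", "prompted"]

accuracy = sum(a == p for a, p in zip(observer, tool)) / len(observer)
print(f"accuracy = {accuracy:.2f}")
for row_label, row in zip(LABELS, confusion_matrix(observer, tool, LABELS)):
    print(row_label, row)
print(per_label_precision_recall(observer, tool, LABELS))
```

Reading the rows shows where errors cluster: here, half of the observer’s “incorrect” responses were labeled “prompted” by the tool, something a single overall accuracy figure hides.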

Step 3: Check fit for your setting.

- If you need creativity and drafting speed, generative may fit—but requires vigilant review.

- If you need consistent scoring or classification, discriminative may fit—but requires representative training data.
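One rough way to probe whether a discriminative tool’s training data represent your setting is to compare how often key characteristics occur in each. This minimal Python sketch assumes the vendor shares category counts for its training data (if it does not, that is worth asking about); the categories, counts, and 0.2 threshold are hypothetical. It uses total variation distance, half the sum of absolute differences in proportions, which ranges from 0 (identical) to 1 (no overlap).

```python
def proportions(counts):
    """Convert raw counts per category into proportions that sum to 1."""
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}

def total_variation_distance(p_counts, q_counts):
    """Half the summed absolute difference in proportions across all categories."""
    p, q = proportions(p_counts), proportions(q_counts)
    categories = set(p) | set(q)
    return 0.5 * sum(abs(p.get(c, 0.0) - q.get(c, 0.0)) for c in categories)

# Hypothetical counts of session settings in the vendor's training data vs. your caseload.
vendor_training = {"clinic": 900, "home": 100}
your_caseload = {"clinic": 20, "home": 60, "school": 20}

distance = total_variation_distance(vendor_training, your_caseload)
print(f"total variation distance = {distance:.2f}")
if distance > 0.2:  # Hypothetical threshold; choose one suited to your risk tolerance.
    print("Settings differ substantially; ask for performance data matching your setting.")
```

A large distance does not prove the tool will fail in your setting, but it signals that the vendor’s reported performance may not transfer and that local validation matters.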

Key Questions to Ask Before You Use an AI Tool in ABA

When evaluating an AI tool as a BCBA, ask yourself these questions:

- Is it creating or classifying?

- What kind of error would hurt the client most: wrong facts or wrong labels?

- Do I possess the skills and processes to identify and correct those errors before they cause harm?

- Does my consent process explain which type of AI is in use?

Take Action: Practical Steps for BCBAs and Clinical Leaders

- Inventory Your Tools: Label each as generative or discriminative.

- Pick One Output: Run a quality check matched to its type.

- Educate Your Team: Share the table above and ask them to add their own examples.

- Consent Form Update: Add language clarifying what type of AI is in use and how outputs are reviewed.
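For teams that keep their tool inventory in a script or spreadsheet, the first two action items can be sketched as a simple lookup from each tool’s type to the review it needs. This minimal Python sketch is illustrative only: the tool names are hypothetical, and the review steps paraphrase Step 2 of the tool-selection steps.

```python
from dataclasses import dataclass

# Review methods matched to each type, paraphrasing Step 2.
REVIEW_BY_TYPE = {
    "generative": "Proofread for fidelity, ethics, and accuracy against the session record.",
    "discriminative": "Check accuracy metrics, the confusion matrix, and edge-case performance.",
}

@dataclass
class AITool:
    name: str
    creates_content: bool  # True if the tool produces new text, images, etc.

    @property
    def ai_type(self) -> str:
        # Step 1: creating new content -> generative; assigning a category -> discriminative.
        return "generative" if self.creates_content else "discriminative"

    @property
    def review_method(self) -> str:
        return REVIEW_BY_TYPE[self.ai_type]

# Hypothetical inventory entries.
inventory = [
    AITool("Session note drafter", creates_content=True),
    AITool("Goal mastery predictor", creates_content=False),
]

for tool in inventory:
    print(f"{tool.name}: {tool.ai_type} -> {tool.review_method}")
```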

Ready to go deeper? Enroll in the Foundations of AI Literacy for Behavior Analysts course and earn 1.5 BACB® Ethics CEUs. Taught by David J. Cox, Ph.D., M.S.B., BCBA-D, an internationally recognized leader in behavioral data science, this course builds the critical thinking skills to evaluate AI tools confidently and ethically, without needing a background in computer science. You’ll also unlock access to our advanced, role-specific tracks built for RBTs, BCBAs, Clinical Directors, Academics, and Organizational Leaders. Learn more or register here.

Final Thoughts: Why AI Literacy Belongs in Every BCBA’s Toolkit

As AI continues to shape the future of applied behavior analysis, understanding the foundational difference between generative and discriminative models is no longer optional. It’s core to professional competence, ethical practice, and client safety. Behavior analysis and artificial intelligence are converging, but tools don’t make ethical decisions; people do. If you delegate your judgment, don’t be surprised when the outcome reflects someone else’s values. Generative AI can be your creative ally; discriminative AI can be your reliable scout. But only if you know which one you’re dealing with, play to its strengths, and guard against its weaknesses.

Know your tools. Know your role. And play to both of their strengths.

About the Authors:

David J. Cox, Ph.D., M.S.B., BCBA-D is a full-stack data scientist and behavior analyst with nearly two decades of experience at the intersection of behavior science, ethics, and AI. Dr. Cox is a thought leader on ethical AI integration in ABA and the author of the forthcoming commentary Ethical Behavior Analysis in the Age of Artificial Intelligence. He has published over 70 journal articles and books on related topics and is quite possibly the only person in the field with expertise in behavior analysis, bioethics, and data science.

Ryan O’Donnell, MS, BCBA is a Board Certified Behavior Analyst (BCBA) with over 15 years of experience in the field. He has dedicated his career to helping individuals improve their lives through behavior analysis and is passionate about sharing his knowledge and expertise with others. He oversees The Behavior Academy and helps top ABA professionals create video-based content in the form of films, online courses, and in-person training events. He is committed to providing accurate, up-to-date information about the field of behavior analysis and the various career paths within it.